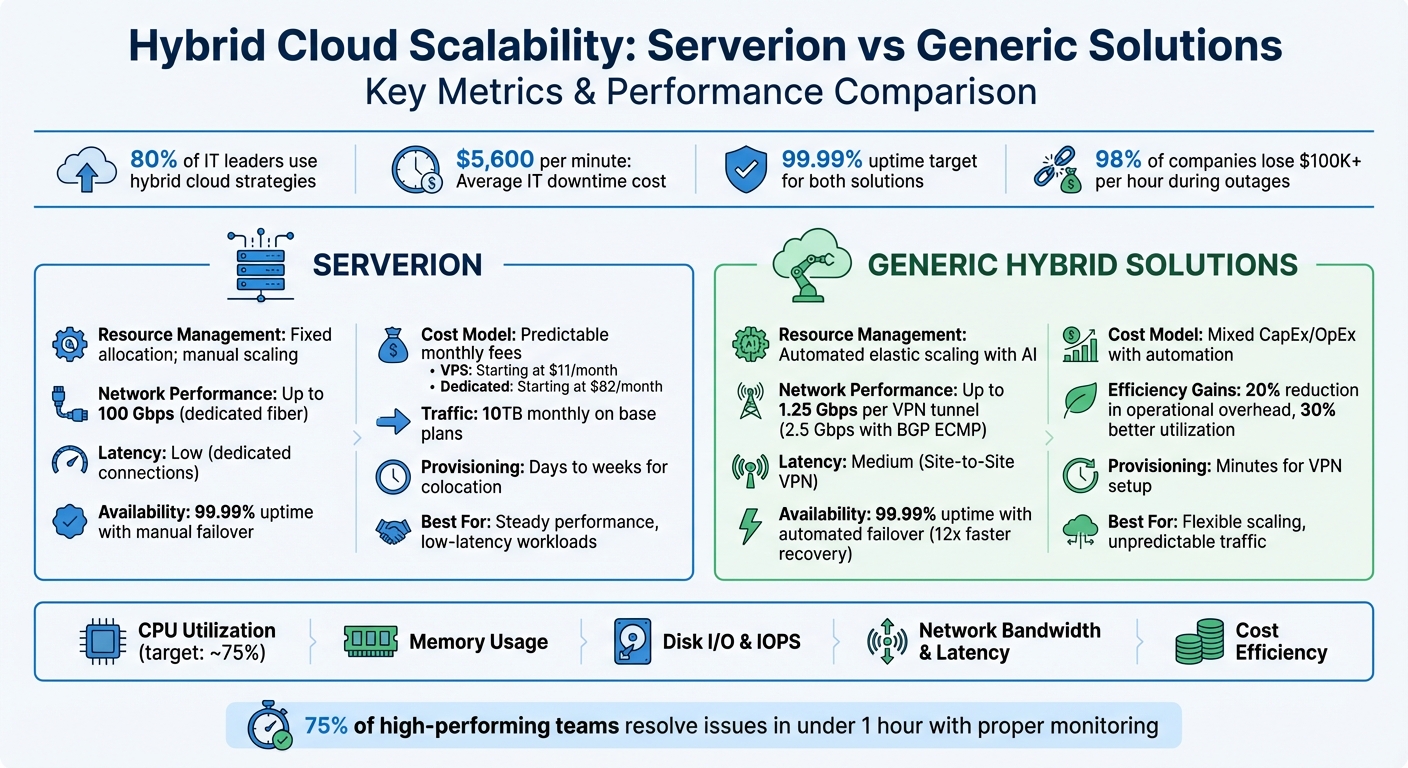

Scalability in Hybrid Cloud: Key Metrics to Track

- Hybrid Cloud Adoption: 80% of IT leaders are using hybrid cloud strategies, but complexity from distributed workloads can lead to bottlenecks and downtime.

- Downtime Costs: IT downtime costs an estimated $5,600 per minute on average, with 98% of companies losing over $100,000 per hour of downtime.

- Key Metrics to Track: CPU utilization, memory usage, disk I/O, network bandwidth, and latency are essential for identifying and preventing performance issues.

- Serverion vs. Generic Solutions: Serverion offers fixed resource allocation and low-latency connections, while generic platforms provide automated scaling and faster failover.

Quick Comparison

| Factor | Serverion | Generic Hybrid Solutions |
|---|---|---|
| Resource Management | Fixed allocation; manual scaling | Automated elastic scaling |
| Latency | Low (dedicated fiber up to 100 Gbps) | Medium (Site-to-Site VPN capped at 1.25 Gbps per tunnel) |
| Availability | 99.99% uptime; manual failover | 99.99% uptime; automated failover |
| Cost Model | Predictable monthly fees | Mixed CapEx/OpEx with automation |

Tracking the right metrics and choosing the right infrastructure ensures better scalability, fewer bottlenecks, and lower downtime costs. Whether prioritizing steady performance or flexible scaling, align your monitoring strategy with your business goals.

Serverion vs Generic Hybrid Cloud Solutions: Performance and Cost Comparison


1. Serverion

Serverion provides a solid infrastructure for monitoring scalability within hybrid cloud environments. Through its range of dedicated servers, VPS, colocation, and specialized hosting options, Serverion enables businesses to track and fine-tune the metrics that ensure their hybrid setups can handle growth. With data centers strategically located worldwide, the platform offers the tools needed to evaluate and enhance performance metrics effectively.

Resource Utilization

Efficient resource monitoring is crucial for identifying when systems are nearing their capacity. By tracking CPU, memory, and disk usage across Serverion’s infrastructure, businesses can spot potential bottlenecks before they impact performance. A good rule of thumb is to keep sustained resource usage at or below about 75%, which leaves headroom for spikes in demand while avoiding the inefficiencies of over-provisioning. Serverion’s dedicated servers, available in various configurations, provide the visibility needed to monitor hardware-level metrics and maintain smooth operations.
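
The 75% rule of thumb can be turned into a simple check. The following is a minimal sketch, not a Serverion tool: the `ResourceSample` type, the function names, and the sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass

TARGET_UTILIZATION = 0.75  # rule-of-thumb ceiling from the text above


@dataclass
class ResourceSample:
    name: str        # e.g. "cpu", "memory", "disk"
    used: float      # amount in use, in the resource's own unit
    capacity: float  # total available, same unit as `used`


def utilization(sample: ResourceSample) -> float:
    """Fraction of capacity currently in use."""
    if sample.capacity <= 0:
        raise ValueError(f"{sample.name}: capacity must be positive")
    return sample.used / sample.capacity


def over_target(samples, target=TARGET_UTILIZATION):
    """Return the names of resources whose utilization exceeds the target."""
    return [s.name for s in samples if utilization(s) > target]


# Illustrative readings for one server (made-up numbers).
samples = [
    ResourceSample("cpu", used=6.8, capacity=8),      # cores busy
    ResourceSample("memory", used=9.0, capacity=16),  # GB
    ResourceSample("disk", used=1.2, capacity=2.0),   # TB
]
print(over_target(samples))
```

In practice the readings would come from whatever monitoring agent you already run, and the check would be applied to averages over a window rather than to single samples.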

Performance and Latency

Network performance plays a critical role in hybrid environments, especially when data needs to move seamlessly between on-premises systems and cloud resources. Serverion’s dedicated servers include up to 10TB of monthly traffic on base plans, which gives hybrid workloads room for regular data transfer between sites. A traffic allowance does not guarantee low latency, though, so latency needs to be monitored directly: it measures how quickly data packets travel between endpoints. In hybrid setups, where workloads are often distributed across multiple locations, consistent tracking of this metric is vital to maintaining smooth operations.
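
When tracking latency, percentiles are usually more informative than averages, because one slow path can hide behind a healthy mean. Here is a small sketch using the nearest-rank method. The round-trip times are made-up sample data, and `percentile` is a helper written for this example.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Illustrative round-trip times in milliseconds between two sites.
rtt_ms = [12.1, 11.8, 12.4, 13.0, 11.9, 48.7, 12.2, 12.0, 12.6, 12.3]

print("p50:", percentile(rtt_ms, 50))
print("p95:", percentile(rtt_ms, 95))
```

Alerting on a high percentile such as p95 surfaces the occasional slow round trip that a median or mean would smooth over.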

Availability and Scalability

Serverion’s VPS and dedicated server solutions are designed to support scalable stateless architectures, allowing requests to be routed to any instance during horizontal scaling. This flexibility helps your infrastructure handle fluctuating demand without compromising availability. By defining scaling thresholds based on real-time metrics like CPU and memory usage, businesses can align resources with actual demand rather than relying on static predictions, even when the scaling step itself is carried out manually. Additionally, Serverion’s global data centers make it easy to deploy scalable solutions across different regions.
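
Threshold-based scaling of this kind can be sketched as a small decision function. This is an illustration only: the function name, the thresholds, and the instance limits are assumptions, not values from any Serverion product. The gap between the scale-out and scale-in thresholds is deliberate, since it keeps the instance count from flapping when load hovers near a single cutoff.

```python
def scaling_decision(cpu, mem, instances, *,
                     scale_out_at=0.75, scale_in_at=0.40,
                     min_instances=2, max_instances=10):
    """Return the target instance count for the current readings.

    cpu and mem are fleet-wide average utilizations between 0.0 and 1.0.
    The busier of the two resources drives the decision.
    """
    peak = max(cpu, mem)
    if peak > scale_out_at and instances < max_instances:
        return instances + 1  # add capacity before the bottleneck hits
    if peak < scale_in_at and instances > min_instances:
        return instances - 1  # release capacity that is sitting idle
    return instances          # inside the comfort band: no change


print(scaling_decision(cpu=0.82, mem=0.50, instances=3))  # busy fleet
print(scaling_decision(cpu=0.30, mem=0.20, instances=3))  # quiet fleet
```

Whether the returned target is applied by an orchestrator or used as a prompt for a manual plan upgrade depends on the platform.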

Cost Efficiency

Serverion’s pricing – starting at $11/month for VPS plans and $82/month for dedicated servers – offers businesses a clear view of infrastructure costs. By comparing resource utilization against these fixed expenses, you can identify underused resources that may inflate costs without delivering value. Comprehensive monitoring ensures you strike the right balance between maintaining capacity for growth and controlling expenses. When paired with performance tracking, this approach helps businesses optimize their hybrid cloud environments for both scalability and cost-effectiveness.
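
Comparing utilization against a fixed fee comes down to simple arithmetic. The sketch below uses the $11/month VPS price quoted above. The 25% utilization figure and the function names are assumptions chosen for illustration.

```python
def idle_spend(monthly_fee, avg_utilization):
    """Portion of a fixed monthly fee that pays for unused capacity."""
    if not 0 <= avg_utilization <= 1:
        raise ValueError("avg_utilization must be between 0 and 1")
    return monthly_fee * (1 - avg_utilization)


def effective_cost(monthly_fee, avg_utilization):
    """Fee per fully used server-equivalent; grows as utilization falls."""
    if not 0 < avg_utilization <= 1:
        raise ValueError("avg_utilization must be in (0, 1]")
    return monthly_fee / avg_utilization


fee = 11.00   # VPS starting price from the text, USD per month
usage = 0.25  # assumed average utilization

print(f"idle spend: ${idle_spend(fee, usage):.2f} per month")
print(f"effective cost: ${effective_cost(fee, usage):.2f} per month")
```

A server running at a quarter of its capacity effectively costs four times its list price per unit of work done, which is the signal to consolidate or downsize.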

2. Generic Hybrid Cloud Solutions

Managing hybrid cloud environments effectively requires a unified approach to monitoring metrics. Without this, bottlenecks can go unnoticed, and scaling issues may take longer to address. The complexity grows when data needs to move between different environments, as network characteristics and cost structures can vary widely. Unlike tailored infrastructures, generic solutions often need extra monitoring layers to bridge the gaps between disparate systems.

Resource Utilization

Keeping an eye on CPU usage and load averages is critical to spotting capacity bottlenecks. In hybrid setups, the challenge lies in syncing monitoring tools across both on-premises and cloud environments, where platforms might use incompatible metrics or formats. Memory usage is equally important – excessive use can lead to page swapping, which slows performance. Storage metrics like IOPS (I/O operations per second) and disk read/write speeds are also key. These help pinpoint whether data-heavy applications are running into storage limitations, especially when metrics are collected from multiple systems.
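
One way to handle incompatible metric formats is to convert every source into a single schema before comparing anything. The sketch below is hypothetical throughout: both payload shapes, the field names, and the `Metric` type are invented for illustration and do not correspond to any particular agent or cloud API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    source: str          # "onprem" or "cloud"
    host: str
    cpu_fraction: float  # 0.0 to 1.0
    mem_fraction: float  # 0.0 to 1.0


def from_onprem(payload: dict) -> Metric:
    """Hypothetical agent format: CPU as a percentage, memory in megabytes."""
    return Metric(
        source="onprem",
        host=payload["host"],
        cpu_fraction=payload["cpu_pct"] / 100,
        mem_fraction=payload["mem_used_mb"] / payload["mem_total_mb"],
    )


def from_cloud(payload: dict) -> Metric:
    """Hypothetical cloud format: CPU already a fraction, memory in bytes."""
    mem = payload["memory"]
    return Metric(
        source="cloud",
        host=payload["resource_id"],
        cpu_fraction=payload["cpu_utilization"],
        mem_fraction=mem["used_bytes"] / mem["total_bytes"],
    )


fleet = [
    from_onprem({"host": "db01", "cpu_pct": 50.0,
                 "mem_used_mb": 12288, "mem_total_mb": 16384}),
    from_cloud({"resource_id": "vm-42", "cpu_utilization": 0.25,
                "memory": {"used_bytes": 2 * 1024**3,
                           "total_bytes": 8 * 1024**3}}),
]

# With one schema, a single query covers both environments.
busiest = max(fleet, key=lambda m: m.mem_fraction)
print(busiest.host, busiest.mem_fraction)
```

Normalizing at ingestion keeps dashboards and alert rules free of per-platform unit conversions.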

Performance and Latency

Network performance plays a major role in hybrid cloud setups. Latency, which is the sum of propagation, transmission, processing, and queuing delays, can significantly impact systems, particularly when workloads are spread across distant data centers and cloud regions. Link capacity matters too, because a saturated link adds queuing delay. Standard Site-to-Site VPNs typically support up to 1.25 Gbps per tunnel, while dedicated fiber connections can handle up to 100 Gbps. For organizations that need more bandwidth but lack dedicated circuits, BGP ECMP load balancing across multiple VPN tunnels can deliver 2.5 Gbps per attachment on transit gateways.
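
The delay components and link rates above can be put into numbers with a rough model. This is a back-of-the-envelope sketch: it assumes signals travel through fiber at about 2 × 10^8 m/s (roughly two thirds of the speed of light), treats processing and queuing delay as inputs you measure yourself, and assumes ECMP spreads traffic evenly across tunnels. The function names are made up for this example.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # approximate signal speed in optical fiber


def propagation_delay_ms(distance_km):
    """Time for a signal to cover the distance, in milliseconds."""
    return distance_km * 1000 / FIBER_SPEED_M_PER_S * 1000


def transmission_delay_ms(payload_bytes, link_gbps):
    """Time to push the payload onto the link, in milliseconds."""
    return payload_bytes * 8 / (link_gbps * 1e9) * 1000


def one_way_latency_ms(distance_km, payload_bytes, link_gbps,
                       processing_ms=0.0, queuing_ms=0.0):
    """Sum of the four delay components named in the text."""
    return (propagation_delay_ms(distance_km)
            + transmission_delay_ms(payload_bytes, link_gbps)
            + processing_ms + queuing_ms)


def aggregate_gbps(tunnels, per_tunnel_gbps=1.25):
    """Ideal ECMP throughput, assuming traffic is spread evenly."""
    return tunnels * per_tunnel_gbps


one_gb = 1_000_000_000  # bytes
print(f"1 GB over one VPN tunnel: {transmission_delay_ms(one_gb, 1.25) / 1000:.2f} s")
print(f"1 GB over 100 Gbps fiber: {transmission_delay_ms(one_gb, 100) / 1000:.2f} s")
print(f"two ECMP tunnels: {aggregate_gbps(2)} Gbps")
print(f"1,000 km, one 1,500-byte packet: {one_way_latency_ms(1000, 1500, 1.25):.4f} ms")
```

For small packets over long distances, propagation delay dominates and no amount of extra bandwidth removes it. For bulk transfers, the link rate dominates.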

Availability and Scalability

Aiming for 99.99% availability is a common goal for minimizing downtime. Achieving this requires tracking metrics like Mean Time Between Failures (MTBF) and Mean Time to Repair (MTTR). Monitoring concurrent connections, both active and failed, ensures systems don’t hit their capacity limits. By understanding these limits, organizations can adjust workloads proactively instead of reacting to failures after they occur.
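
MTBF and MTTR relate to availability through the standard steady-state formula, availability = MTBF / (MTBF + MTTR). The sketch below applies it and converts an availability target into a yearly downtime budget. The cost figure reuses the $5,600-per-minute estimate quoted at the top of this article, and the function names are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def availability(mtbf_hours, mttr_hours):
    """Steady-state availability from mean time between failures
    and mean time to repair (both in the same unit)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def downtime_minutes_per_year(avail):
    """Downtime budget implied by an availability target."""
    return (1 - avail) * MINUTES_PER_YEAR


def downtime_cost(minutes, cost_per_minute=5600):
    """Rough cost of downtime using the per-minute estimate from the text."""
    return minutes * cost_per_minute


target = 0.9999
budget = downtime_minutes_per_year(target)
print(f"downtime budget at 99.99%: {budget:.2f} minutes per year")
print(f"estimated cost if fully used: ${downtime_cost(budget):,.0f}")

# A system that fails every 9,999 hours and takes 1 hour to repair
# sits at the 99.99% target.
print(f"availability: {availability(9999, 1):.4%}")
```

A 99.99% target leaves about 52.56 minutes of downtime per year, so shortening MTTR is often the most direct way to stay within budget.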

Cost Efficiency

Cost management in hybrid environments involves identifying underused resources – like idle VMs, powered-off instances, and underutilized storage – that can drive up expenses unnecessarily. Keeping an eye on data egress charges, such as free inbound data versus billed outbound transfers, is another way to control costs. Organizations that adopt unified management tools often report a 20% reduction in operational overhead by standardizing IT processes, while effective capacity planning can boost infrastructure utilization by 30%.
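
Both checks described here, finding idle machines and estimating egress charges, are easy to prototype. In this sketch the 5% idle threshold, the VM names, and the $0.09 per GB rate are placeholder assumptions. Substitute your provider’s actual pricing and your own utilization data.

```python
def idle_vms(avg_cpu_by_vm, idle_below=0.05):
    """Names of VMs whose average CPU over the window is under the threshold."""
    return sorted(name for name, cpu in avg_cpu_by_vm.items()
                  if cpu < idle_below)


def egress_cost(outbound_gb, rate_per_gb, free_gb=0.0):
    """Bill only outbound transfer above the free allowance.
    Inbound transfer is treated as free, as described in the text."""
    billable = max(0.0, outbound_gb - free_gb)
    return billable * rate_per_gb


# Illustrative 30-day average CPU utilization per VM.
avg_cpu = {"web-1": 0.42, "batch-old": 0.01, "test-7": 0.03}
print("idle candidates:", idle_vms(avg_cpu))

# Hypothetical rate and allowance.
print(f"egress: ${egress_cost(1500, rate_per_gb=0.09, free_gb=100):.2f}")
```

Idle candidates still need a human check before shutdown, since a low-CPU machine may be a standby node or a license server.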


Pros and Cons

When comparing scalability options, the decision between Serverion’s infrastructure and typical hybrid cloud platforms hinges on what you value most: control, performance, or flexibility. Serverion’s dedicated servers provide direct access to physical resources, ensuring consistent performance without the overhead of virtualization, while its VPS plans offer fixed resource allocations. This makes them a solid choice for workloads requiring steady IOPS, such as databases, or real-time applications needing low-latency connections. On the other hand, hybrid cloud platforms rely on automation and elastic scaling, using machine learning to predict workload demands and adjust resources dynamically. While this reduces the need for manual adjustments, it can introduce some variability in performance. Let’s break down the differences further.

Network Performance

Serverion’s colocation services stand out with their ability to deploy dedicated fiber connections capable of speeds up to 100 Gbps, all while minimizing jitter. This setup rivals high-speed dedicated networks and is ideal for latency-sensitive tasks like voice over IP (PBX hosting) or blockchain masternodes, where avoiding unpredictable internet routes is crucial. By contrast, hybrid platforms typically use Site-to-Site VPN connections, which are capped at 1.25 Gbps per tunnel. This can lead to less consistent latency, making them less suitable for applications requiring ultra-low latency.

Cost Structures

The pricing models also differ significantly. Serverion offers straightforward plans – like a dedicated server starting at $75/month with fixed resources (1x Xeon Quad, 16GB RAM, 2x 1TB SATA, 10TB traffic). This simplicity makes budgeting more predictable. Hybrid platforms, however, use a mixed CapEx/OpEx model with features like reserved instances and spot pricing. While these platforms can optimize costs through automation, they often require orchestration tools like Terraform or Ansible to maintain consistency across environments.

Uptime and Scaling

Serverion ensures 99.99% uptime through redundant data centers and DDoS protection. However, scaling beyond existing resources often involves manual intervention or upgrading to a larger plan. Hybrid platforms, with their automated failover capabilities, can recover services up to 12 times faster than traditional setups. In fact, 75% of high-performing teams on these platforms resolve issues in under an hour, thanks to centralized monitoring and orchestration tools.

Here’s a quick summary of the key differences:

| Factor | Serverion | Generic Hybrid Solutions |
|---|---|---|
| Resource Management | Fixed allocation; manual scaling | Automated elastic scaling with predictive tools |
| Latency | Low (dedicated fiber up to 100 Gbps) | Medium (Site-to-Site VPN capped at 1.25 Gbps) |
| Availability | 99.99% uptime with manual failover | 99.99% uptime with automated failover (faster recovery) |
| Cost Model | Predictable monthly fees (e.g., $75/month) | Mixed CapEx/OpEx with automated cost controls |
| Provisioning Time | Days to weeks for colocation setup | Minutes for VPN; days to weeks for private circuits |

This comparison highlights the tradeoffs between the two approaches, helping you weigh your priorities when selecting a scalability strategy. Whether you need predictable performance or the flexibility of automation, each option serves distinct needs.

Conclusion

The metrics we’ve covered are the foundation for managing scalable hybrid cloud environments effectively. Keeping an eye on them helps you fine-tune your infrastructure and reduces the risk of costly downtime. With 44% of data center outages linked to IT failures, monitoring matters: as noted above, automated failover backed by centralized monitoring can restore services up to 12 times faster than traditional setups.

When deciding on the right approach, it boils down to your business needs: predictable performance or flexible scaling. For workloads that demand steady performance with minimal latency, Serverion offers reliability through dedicated infrastructure and fixed resource allocation. On the other hand, if your operations face unpredictable traffic surges or need rapid scaling across locations, platforms with AI-driven autoscaling can adjust resources in just minutes.

No matter the path you take, setting performance baselines for metrics like IOPS, latency, and capacity growth under normal conditions is key. This helps you identify genuine issues before they spiral out of control. Prioritize metrics that directly affect uptime – like error rates and capacity usage – to avoid being overwhelmed by unnecessary alerts. Aligning your monitoring strategy with Service Level Objectives (SLOs) ensures you’re not just maintaining systems but actively enhancing business performance and planning for future scalability.
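
A performance baseline can be as simple as the mean and standard deviation of a metric under normal load, with alerts limited to values that fall well outside that range. The following is a minimal sketch. The three-sigma cutoff, the function names, and the IOPS figures are assumptions for illustration, and real workloads with daily or weekly cycles usually need a baseline per time window.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize normal behaviour as (mean, sample standard deviation)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples for a baseline")
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """True when value is more than k standard deviations from the mean."""
    center, spread = baseline
    return abs(value - center) > k * spread


# Illustrative IOPS readings collected under normal conditions.
normal_iops = [1000, 1020, 980, 1010, 990]
baseline = build_baseline(normal_iops)

for reading in (1040, 1100, 940):
    status = "ALERT" if is_anomalous(reading, baseline) else "ok"
    print(reading, status)
```

Raising `k` trades sensitivity for fewer alerts, which is one practical way to avoid the alert overload mentioned above.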

FAQs

What metrics should I monitor to ensure scalability in a hybrid cloud environment?

To make the most of scalability in a hybrid cloud setup, keeping tabs on a few critical performance metrics is key. Start with CPU and memory usage – these are your go-to indicators for ensuring resources are used wisely. Then, watch storage I/O and latency to prevent slowdowns in data access or retrieval. And don’t forget about network throughput and latency – these are vital for keeping communication between systems running smoothly.

You’ll also want to monitor the request rate (requests per minute) and error rates to understand workload demands and spot any issues early. Keeping track of the frequency of scaling events can reveal patterns and help you adjust your scaling strategies. Finally, take a close look at cost efficiency, like the cost per compute unit, to strike the right balance between performance and budget. By staying on top of these metrics, you’ll create a hybrid cloud environment that’s both responsive and cost-effective.

How does Serverion deliver low latency and high availability in hybrid cloud environments?

Serverion delivers exceptional performance and reliability in hybrid cloud setups by utilizing cutting-edge infrastructure and smart technologies. By strategically placing data centers worldwide, Serverion reduces latency by keeping data closer to users, cutting down the time it takes for information to travel. This not only boosts speed but also ensures redundancy, so operations continue smoothly even during unexpected hiccups.

To keep services running without a hitch, Serverion relies on continuous monitoring and automated failover systems. Paired with secure networking options like VPN tunnels and dedicated connections, these features ensure a fast, dependable hybrid cloud experience tailored to meet specific demands.

What are the cost factors to consider when choosing between fixed and automated scaling in a hybrid cloud environment?

When choosing between fixed and automated scaling in a hybrid cloud environment, two critical factors to weigh are cost efficiency and resource utilization. Fixed scaling means paying a set rate, such as a flat monthly fee, for resources that are pre-provisioned. This approach guarantees consistent availability but can result in paying for capacity that isn’t always used. On the other hand, automated scaling adjusts resources dynamically to match demand. While this reduces waste, it can bring extra costs for monitoring and managing the scaling process.

To decide which option works best, it’s essential to look at the total cost – this includes both the cost of the resources themselves and the expenses tied to managing automated scaling. Striking the right balance between predictable costs and the flexibility to scale as needed is crucial for maintaining performance while staying within budget in a hybrid cloud setup.
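
The total-cost comparison can be sketched with a few lines of arithmetic. Apart from the $11/month VPS price quoted earlier, every number here (instance count, hourly rate, instance-hours, management overhead) is a made-up placeholder, and the function names are illustrative.

```python
def fixed_monthly_cost(monthly_fee, instances):
    """Pre-provisioned capacity: pay for every instance all month."""
    return monthly_fee * instances


def autoscaled_monthly_cost(instance_hours, hourly_rate, management_overhead):
    """Pay per instance-hour actually used, plus the cost of the
    monitoring and orchestration needed to run the scaling."""
    return instance_hours * hourly_rate + management_overhead


def break_even_hours(fixed_cost, hourly_rate, management_overhead):
    """Instance-hours per month at which both models cost the same."""
    return (fixed_cost - management_overhead) / hourly_rate


fixed = fixed_monthly_cost(monthly_fee=11.00, instances=4)
auto = autoscaled_monthly_cost(instance_hours=1800, hourly_rate=0.02,
                               management_overhead=15.00)

print(f"fixed: ${fixed:.2f}  autoscaled: ${auto:.2f}")
print(f"break-even: {break_even_hours(fixed, 0.02, 15.00):.0f} instance-hours")
```

Below the break-even point the automated model is cheaper. Above it, the fixed plan wins, so the decision depends on how many instance-hours your demand profile actually consumes.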